A new video, the second in a series that attempts to explain clearly an area of cognitive error and how we can work to avoid it. A resource for objectivity training!

Category Archives: Critical Thinking

Objectivity training

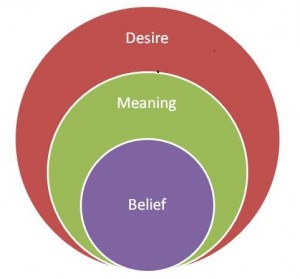

In my account of practice in Middle Way Philosophy, I have identified three (interlinked) kinds of practice area, which I call integration of desire, integration of meaning, and integration of belief. To integrate desire, the central practices will be meditation or other practices involving body awareness. To integrate meaning, the central practices are likely to be the arts. To integrate belief, I have so far often focused on critical thinking as a central practice. Distinguishing these types of integration and linking them together in this way seems to involve a distinctive emphasis, but the practices of meditation and the arts are themselves widely recognised as beneficial in all sorts of ways.

I have been thinking more recently of the third area as one on which I need to focus, because, although it has all kinds of links to academic practice, it is rarely either seen as integrative or linked effectively to the other types of integration. Instead, the habit of many people seems to be to fence these areas off as ‘intellectual’ concerns of interest only to a minority of boffins. The whole area of working with awareness of our beliefs and judgements, perhaps, needs a re-presentation.

Lately I have hit on a label for the whole enterprise that might help with this. ‘Critical thinking’ has not really been doing that job well, for several reasons. People tend to associate being ‘critical’ with narrowness and negativity, which obscures the ways in which it can help one cultivate the reverse. Critical Thinking courses are also often narrowly focused on the structure of reasoning and the mistakes we can make in reasoning. These are good things to study, but such courses miss out the whole, important psychological dimension of the integration of belief. What should we call the whole study and practice of the mistakes we make in thinking (not just in ‘reasoning’, but also in our emotional responses and in the attention we bring to our judgements)? It has struck me recently that ‘objectivity training’ might be a better term for this. Of course, the term ‘objectivity’ is still used by too many philosophers and scientists to mean a God’s eye view or absolute perspective of a kind we can’t have as embodied beings. But surely, if it is paired with ‘training’, it will be reasonably obvious that we’re talking about helping people make incremental progress in avoiding delusion and facing up to conditions?

So how can you train someone to be more objective? By helping them to become aware of the kinds of cognitive errors we are all inclined to make, and to train their attention on such errors. That will never guarantee that a judgement is right, but it can help us avoid certain readily identifiable sources of error that we can all fall into through lack of attention. If we can avoid those errors, our judgement will be better trained, and that will give us a much better chance of responding adequately to whatever the ‘reality’ we assume to be out there may throw at us.

There is plenty of evidence about what those errors are, and why human beings generally tend to share them. That evidence comes from both philosophy and psychology, and one of the distinctive things I want to do is to fit the contributions of these two disciplines together as seamlessly as possible, with Middle Way Philosophy as the stitching thread. The errors we make can be grouped into three categories:

- Cognitive biases as identified in psychology (or, to be more precise, the elements of cognitive biases that we can do something about, and that are not just unavoidable aspects of the human condition): for example, actor-observer bias or anchoring

- Fallacies, particularly informal fallacies, as identified in philosophical tradition: for example, ad hominem or ad hoc argument

- Metaphysical beliefs in dualistic pairs, as recognised and avoided in the Middle Way: for example, realism and idealism, or theism and atheism

In the end I think there is no ultimate distinction between these categories: they often mark the same kinds of error, investigated and understood from different standpoints. What I think they have in common is absolutisation: the tendency for the representations of our left brain hemisphere to be accompanied by a belief that they give the whole picture and that no other perspectives are worth considering. We can absolutise a positive claim or a negative claim, but either way we repress the alternatives.

For the full argument as to how absolutisation links all these kinds of errors, you’ll have to see my book Middle Way Philosophy 4: The Integration of Belief, which makes the case in detail. However, the main reason for focusing on absolutisation is the practical value of doing so. Objectivity training means training ourselves to bring enough awareness to spot those absolutisations when they happen, to realise they are not the whole story, and to open our minds to the alternatives before we make mistakes, entrench ourselves in unnecessary conflict, or fail to solve the problems we encounter through inadequate engagement with their conditions.

What does objectivity training actually involve? Well, it’s something I want to focus on developing further and testing out in the next few months or years. Here, though, is the general outline of what I envisage:

- Becoming familiar with cognitive biases, fallacies and metaphysical beliefs – not just as isolated abstractions, but in relation to each other

- Developing understanding of why these errors are a problem for us in practice

- Becoming familiar with examples of these errors

- Identifying these cognitive errors in actual individual experience: this might involve reflection on past experience, critical reading and listening, observation of others with feedback, anticipating contexts where errors will take place, development of attention around judgement through trigger points and general use of mindfulness practice.

This is not just ‘logic’: rather it focuses on the assumptions we make. Though some of the mistakes may be identifiable as errors of reasoning, most will not, and part of the problem may well be that our narrow position seems entirely ‘rational’ to us. Nor is it just therapy, though it may have some elements in common with cognitive behavioural therapy. It needs to be an autonomous aspect of individual practice, rather like meditation and mindfulness (with which it needs to have a near-symbiotic relationship).

I think it’s really important that more people in our society learn these kinds of skills. They can be applied to our judgements everywhere: in politics, in business, in personal relationships, in spending judgements as consumers, in scientific judgement, in our attitudes to the environment, in our judgement about the arts, in leadership, in education and so on. In some of these areas, such as environmental judgement, we very urgently need to improve people’s judgement skills.

I think there is huge potential for this type of training, which is why I want to focus on developing it. But the other forms of integrative practice are also very important, which is why I hope other people will emerge in the society who can take a more effective lead in developing and teaching distinctive Middle Way approaches to meditation and the arts.

Responsibility

A new talk edited from the 2014 Summer Retreat, on a theme very relevant to this year’s retreat, which is on ethics. How can we find a Middle Way between assuming that we (and others) are totally responsible for what we do and, on the other hand, assuming no responsibility?

The MWS Podcast 65: Bjorn Ihler on surviving Utøya, resolving extremism and the Middle Way

We are joined today by Bjorn Ihler, who is a peace activist, writer and filmmaker working against dogmatism, particularly in the forms of racism, hatred and violent extremism. His work is largely founded on his experiences as a survivor of the attack on Utøya island in Norway in 2011. He has written numerous articles for national and international newspapers and has been an inspiration to many activists through participation in conferences and organisations such as the Oslo Freedom Forum, Against Violent Extremism and the Forgiveness Project. Bjorn has a degree in Theatre and Performance Design and Technology from the Liverpool Institute for Performing Arts. In 2013, he collaborated in the writing of the play ‘The Events’ by David Greig, an account of a survivor of an attack resembling the one on Utøya, and he is currently producing the film ‘Rough Cut – Genocide and Crimes Against Humanity’. Bjorn recounts the events of July 22nd 2011 and their repercussions. We discuss the roots of extremism, the key role education can play in addressing the problem, especially through critical thinking, and how all this might relate to the Middle Way.

MWS Podcast 65: Bjorn Ihler as audio only:

Download audio: MWS_Podcast_65_Bjorn Ihler

Critical Thinking 15: The Texas Sharpshooter Fallacy

The Texas sharpshooter fallacy is one of the most amusing fallacies in Critical Thinking, perhaps because it is based on a story. The Texas sharpshooter is a man who practises shooting by putting bullet holes in his barn wall: then, when there is a cluster of holes in the wall, he draws a target around them. To commit the Texas Sharpshooter Fallacy, then, is to fit your theory to a pre-existing pattern of coincidences.

This video is an advert for a book, but presents the fallacy rather well:

The cognitive bias that might lead us into the Texas Sharpshooter Fallacy is called the Clustering Illusion. If we see a cluster of something (bullet holes, cancer cases, high grades in exams, letters used in a text), we have a tendency to assume that this cluster must be significant. Of course, it might be, but then it might not be. I think the fallacy becomes a metaphysical assumption about a ‘truth’ in the world around us when we assume that the pattern must be there, rather than just holding it provisionally as a possibility. Usually we need to look at a lot more evidence, and continue to see the same pattern, before we can justifiably accept the theory that explains it.
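To see how easily chance alone produces a cluster worth drawing a target around, here is a small simulation. It is only an illustrative sketch, not something from the original discussion: it assumes a barn wall divided into a 10 by 10 grid of cells, with 100 shots landing uniformly at random, so that pure chance predicts about one hole per cell. The function names (`fire_shots`, `draw_target_afterwards`) and the seed are hypothetical choices for the example.

```python
import random
from collections import Counter


def fire_shots(n_shots, grid_size, rng):
    """Return a Counter mapping (col, row) cells to hit counts for uniformly random shots."""
    return Counter(
        (rng.randrange(grid_size), rng.randrange(grid_size)) for _ in range(n_shots)
    )


def draw_target_afterwards(hits):
    """The sharpshooter's move: pick the cell that already has the most holes."""
    cell, count = hits.most_common(1)[0]
    return cell, count


def main():
    rng = random.Random(2015)  # fixed seed so the run is repeatable
    n_shots, grid_size = 100, 10
    expected_per_cell = n_shots / grid_size**2  # 1.0 under pure chance

    first_volley = fire_shots(n_shots, grid_size, rng)
    target, hits_in_target = draw_target_afterwards(first_volley)
    print(f"Target drawn around {target}: {hits_in_target} hits "
          f"(chance expectation {expected_per_cell:.1f} per cell)")

    # Fresh evidence: test the same 'target' against a new, independent volley.
    second_volley = fire_shots(n_shots, grid_size, rng)
    print(f"Same target, new volley: {second_volley[target]} hits")


if __name__ == "__main__":
    main()
```

The cell chosen after the fact will typically hold several times the single hole that chance predicts, yet when the same target is checked against a second volley its count usually falls back towards that expectation. That is the point about needing to see a pattern persist in further evidence before accepting a theory that explains it.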

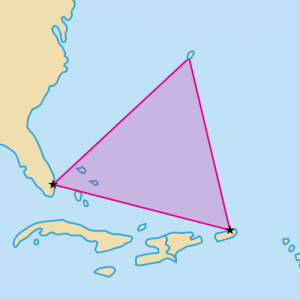

The Middle Way is applicable here because we need to avoid either, on the one hand, jumping to absolute conclusions about the significance of the patterns we encounter or, on the other, assuming that everything is necessarily random and that any theory used to explain a pattern must be false. It may be important to accept consistent patterns of evidence even if they don’t amount to certainty: the evidence for global warming is one example of that. On the other hand, those who argued that 9/11 was a conspiracy set up by the US or Israeli governments (see Wikipedia article) could point only to much more limited patterns of evidence. For example, the claim that Israeli agents were discovered filming the 9/11 scene without apparently being disturbed by it would be consistent with an Israeli plot, but it is only a very small part of a pattern for which all the other elements are missing. A great deal more has to be assumed to support any of the 9/11 conspiracy theories: plausibility within a limited sphere is not enough.

This fallacy links with a number of others: for example, the similar ad hoc reasoning (also known as the ‘No True Scotsman’ Fallacy), where someone refuses to give up a theory that conflicts with evidence and keeps moving the goalposts instead, and post hoc reasoning, which assumes that when one thing follows another the first must cause the second. Post hoc reasoning can be seen as a version of the Texas Sharpshooter, because a pattern of correlation is being identified that is assumed to be causally significant when it may be a matter of coincidence. See the Spurious Correlations website for some hilarious examples of this. The divorce rate in Maine correlates with the per capita consumption of cheese: are depressed Maine divorcees bingeing on cheese?
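Correlations of the cheese-and-divorce kind are easy to generate from nothing. As a rough sketch (my own illustration, not taken from the Spurious Correlations site), the code below builds pairs of completely independent series and counts how often their Pearson correlation exceeds 0.5. It assumes the series are random walks, standing in for quantities such as a rate or a consumption figure that drift over the years, and compares them with trendless random noise. The function names, the 30-step length and the 0.5 threshold are arbitrary choices for illustration.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def random_walk(n_steps, rng):
    """A trending series with no cause behind it: a running sum of random steps."""
    level, series = 0.0, []
    for _ in range(n_steps):
        level += rng.gauss(0, 1)
        series.append(level)
    return series


def white_noise(n_steps, rng):
    """Independent random values with no trend, for comparison."""
    return [rng.gauss(0, 1) for _ in range(n_steps)]


def share_strongly_correlated(make_series, n_pairs=2000, n_steps=30, threshold=0.5, seed=1):
    """Fraction of independently generated pairs whose |r| exceeds the threshold."""
    rng = random.Random(seed)
    strong = 0
    for _ in range(n_pairs):
        a = make_series(n_steps, rng)
        b = make_series(n_steps, rng)  # generated separately: no causal link to a
        if abs(pearson_r(a, b)) > threshold:
            strong += 1
    return strong / n_pairs


if __name__ == "__main__":
    print("random walks:", share_strongly_correlated(random_walk))
    print("white noise: ", share_strongly_correlated(white_noise))
```

Because neither series in a pair has anything to do with the other, every ‘strong’ correlation counted here is pure coincidence. Trending series typically produce far more of them than trendless noise does, which is one reason a correlation between two quantities that both drift over time is weak evidence of a causal link.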

Exercise

How would you judge the following patterns? Are they evidence that could be used to support a theory, or just a small pattern of coincidences?

1. A number of bird droppings on the roof of your car appear to form a letter ‘F’.

2. A number of unsolved disappearances of ships and planes have occurred within the area of the Atlantic known as the Bermuda Triangle. See Wikipedia.

3. During the Apollo 8 mission to the moon, astronaut Jim Lovell announced “Please be informed, there is a Santa Claus”.

4. The first peak of the Spanish Flu outbreak occurred at almost exactly the same time as the armistice of 11/11/18 ending the First World War (see graph).

5. Deaths by shark attack tend to peak and trough at the same time as ice cream sales.